Once well known in the research and academic sectors, the Andrew File System, better known as AFS, continues to chart its own course.

AFS was invented at Carnegie Mellon University in Pittsburgh, Pennsylvania, in the 1980s to solve the challenges of providing centralised file services in a distributed environment. After AFS delivered promising early results, some members of the team left to launch a company dedicated to it, called Transarc.

Transarc Corporation operated as the commercial arm of the project and was acquired by IBM in 1994. It became the IBM Transarc Lab in 1999 and then the IBM Pittsburgh Lab in 2001, before being consolidated in Raleigh, N.C. During that period, in 2000, IBM forked the AFS code base as OpenAFS and released it to the open source community.


Interestingly, one of the founders of Transarc, and one of the original developers of AFS, is Michael Kazar, who later co-founded Spinnaker Networks (acquired by NetApp in 2004) and served as its CTO. More recently, he co-founded Avere Systems (acquired by Microsoft in 2018), again serving as CTO. In 2016, Kazar received the ACM Software System Award for his work on the development of AFS.

As a refresher, let’s summarise the role of AFS within an IT environment. The core idea is to share files across a large number of machines: some are servers that store the data, while others are clients that access and consume it. Both can, of course, create new data. This explains why AFS fits so well in large, horizontally scaled environments such as universities, research centres and academic sites more generally.

The main characteristics of AFS and its derivatives are location independence of data, with a global virtual namespace under /afs shared by servers and clients; strong, strict security powered by Kerberos authentication; and availability on multiple platforms. Data reaches consumer machines through client-side caching: files are copied from the file servers and kept locally as cached copies. This caching behaviour is a defining attribute of AFS and helps sustain a good level of performance, because subsequent requests for the same file are served from the local copy. Changes made locally are written back transparently to the file servers, where the master copy lives, and a callback mechanism lets the servers notify other clients when their cached copies have become stale.
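The caching and callback behaviour described above can be illustrated with a minimal Python sketch. It is a simplified, in-memory model built on our own assumptions, not AFS or AuriStorFS code: the class and method names (`FileServer`, `CacheManager`, `fetch`, `store`, `write_and_close`, `break_callback`) are hypothetical, whole files are cached, changes are written back on close, and the server breaks the callback promises of other clients when a file changes. Real implementations also handle RPC, authentication, locking and failures, all omitted here.

```python
from __future__ import annotations


class FileServer:
    """Hypothetical file server: holds master copies and tracks callback promises."""

    def __init__(self) -> None:
        self.files: dict[str, bytes] = {}
        # path -> clients promised a notification if the file changes
        self.callbacks: dict[str, set[CacheManager]] = {}

    def fetch(self, path: str, client: CacheManager) -> bytes:
        # Hand out the whole file and register a callback promise for the caller.
        self.callbacks.setdefault(path, set()).add(client)
        return self.files[path]

    def store(self, path: str, data: bytes, writer: CacheManager) -> None:
        # Update the master copy, then break the callbacks of every other client.
        self.files[path] = data
        for client in self.callbacks.get(path, set()) - {writer}:
            client.break_callback(path)
        # Only the writer still holds a valid cached copy.
        self.callbacks[path] = {writer}


class CacheManager:
    """Hypothetical client-side cache manager using whole-file caching."""

    def __init__(self, name: str, server: FileServer) -> None:
        self.name = name
        self.server = server
        self.cache: dict[str, bytes] = {}
        self.fetches = 0  # number of round trips to the server

    def read(self, path: str) -> bytes:
        # A cached copy is trusted until the server breaks its callback.
        if path not in self.cache:
            self.cache[path] = self.server.fetch(path, self)
            self.fetches += 1
        return self.cache[path]

    def write_and_close(self, path: str, data: bytes) -> None:
        # Modify the local copy and write it back to the server on close.
        self.cache[path] = data
        self.server.store(path, data, self)

    def break_callback(self, path: str) -> None:
        # The server reports our copy is stale: drop it so the next read refetches.
        self.cache.pop(path, None)
```

For example, a client that reads the same path twice contacts the server only once; after another client writes and closes that file, the first client's cached copy is invalidated and its next read fetches the new contents from the server.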

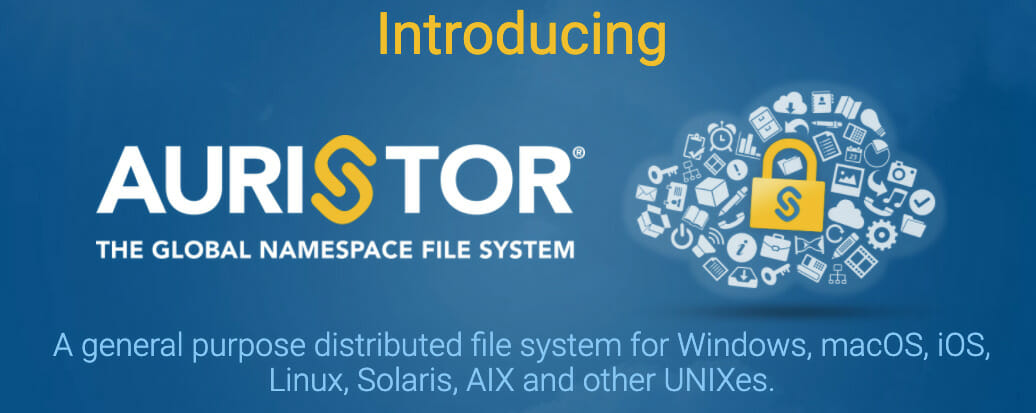

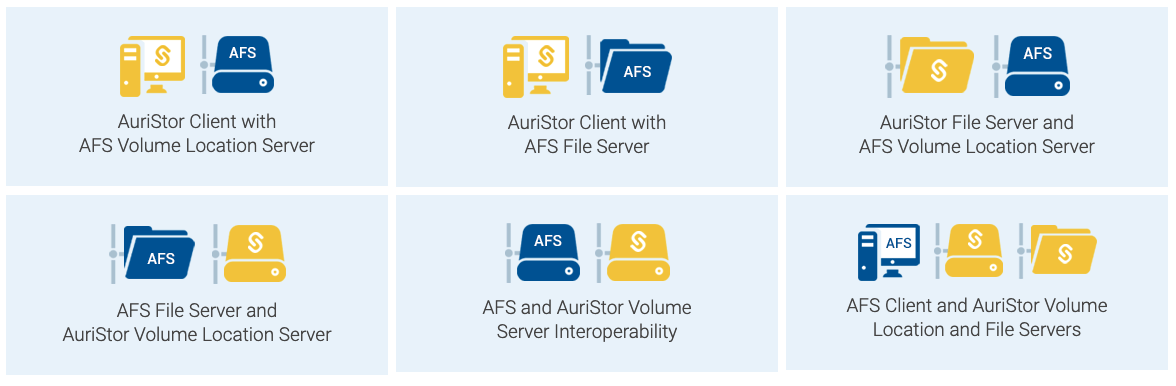

The continued evolution of AFS is driven in particular by AuriStor, a US company we met recently during the 40th IT Press Tour, which develops and maintains the AuriStorFS system. AuriStor has taken the lead in developing and promoting a new wave of AFS, inviting AFS users to migrate to AuriStorFS without any data loss and with limited downtime, and to benefit from several key improvements in performance, scalability and security. These include:

- network transfer of up to 8.2 GB/s per listener thread;

- increased file server performance, as the AuriStor environment can serve the load of 60 OpenAFS 1.6 file servers;

- better UBIK management, mandatory locking and per-file ACLs;

- new platforms such as macOS, Linux and iOS, along with recent validation by Red Hat. This broader platform support allows more flexible deployment models.


“AuriStor has delivered an impressive list of improvements in performance, security and scalability that make AuriStorFS a serious alternative to classic approaches,” said Philippe Nicolas, analyst at Coldago Research.

Another key strength of AFS in general, and of AuriStor in particular given all these improvements, is its ability to be deployed across multiple continents, which is unique on the market. For several years the cloud has changed operations by making data easy to propagate and proliferate, but this mechanism is completely hidden from users because it is operated by the cloud providers rather than by client-side machines. Other caching approaches rely on a star topology, placing edge caching filers that expose industry-standard file sharing protocols in front of cloud object storage. Against these approaches, AuriStor's globally distributed model positions it to play a prominent role in distributed computing use cases.